Cloud computing and AI in digital twins

Nuclear waste clean-up steps up a gear

Project facts

- Client: Washington River Protection Solutions (WRPS)

- Location: Richland, Washington State, USA

- Challenge: With 56 million gallons of nuclear waste to clean up, WRPS needed faster ways to carry out the complex data analysis that supports its predictive simulation capabilities.

- Solution: Working with Twinn’s simulation and digital twin experts, WRPS used artificial intelligence (AI) and machine learning to automate data analysis, and developed an app enabling cloud-based simulation to boost modelling efficiency.

- Impact: Answering a standard 80-scenario question used to take 20-25 working days; it now takes just 2-4 days. This has fundamentally changed how the team uses their digital twins, boosting efficiency and reducing human error.

Washington River Protection Solutions (WRPS) is contracted by the US Department of Energy to manage clean-up of the Hanford site, a 586-square-mile area with 56 million gallons of nuclear waste. Their mission is to reduce the time associated with clean-up, given that hoteling and operation costs add up to millions of dollars a day.

The challenge

A story of collaboration, iteration and innovation

Since 2016, Twinn, formerly known as Lanner, has worked as part of the extended WRPS Mission Analysis Engineering Team. Together, we’ve put the operation at the vanguard of predictive simulation, using digital twins to support safe, efficient clean-up.

The first phase of our partnership was to create a digital twin ecosystem. The initial models, developed using our Witness Horizon predictive simulation software, have helped uncover previously unknown bottlenecks and direct millions of dollars of investment more efficiently.

The second phase of collaboration has focused on evolving WRPS’ digital twin capabilities to facilitate better, faster decision making.

Finding new ways to speed up data analysis

Stakeholders within WRPS and the Department of Energy were increasingly impressed with predictive simulation – and there was rising demand for modelling to be integrated into decision-making processes.

The team was asked to answer extremely complex questions. A single query about improving throughput could easily involve 80 scenarios. It took hours to prepare and check a vast number of input files, days of computing time to run scenarios, and additional days to analyse the large data volumes. One set of experimentation could easily take a month to inform a decision.

The WRPS Mission Analysis Engineering Team and Twinn wanted to find innovative ways to speed up this process.

The solution

Our approach: an app enabling cloud-based simulation, plus artificial intelligence and machine learning

Together, we looked at two ways to boost modelling efficiency: reducing compute time and speeding up analysis. This led to a two-pronged approach:

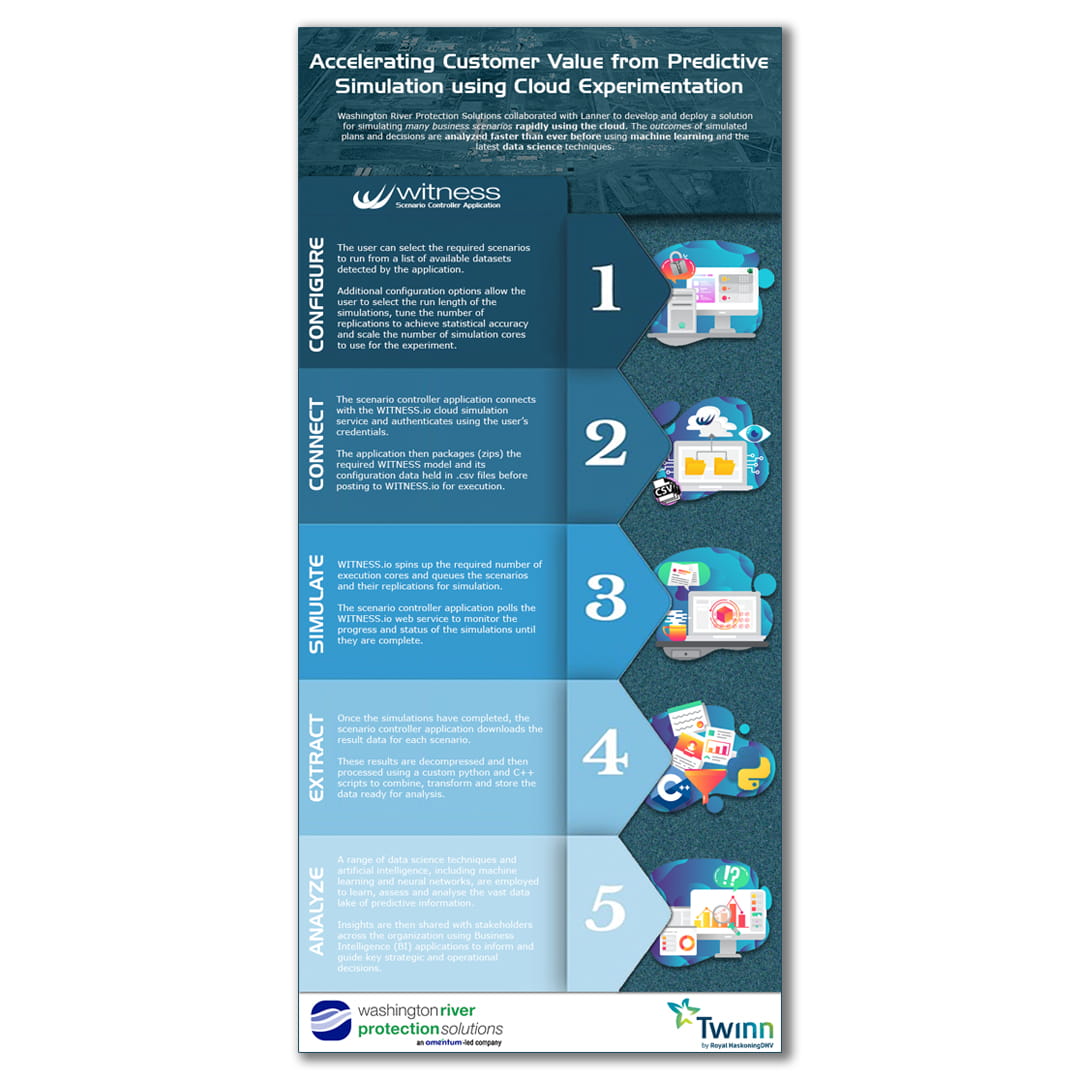

- Developing an app enabling cloud-based experimentation – giving the team more power and velocity to run what-if scenarios

- Using artificial intelligence (AI) and machine learning – to automate data analysis

We developed the bespoke app collaboratively using Witness.io, Twinn’s web service that offers scalable simulation performance for mass experimentation. Using the cloud can drastically reduce overall computing time because many scenarios can be executed in parallel on dedicated simulation cores. It also boosts flexibility and enhances business continuity. This was crucial during the Covid-19 pandemic because the team was able to access the experimentation service remotely. Plus, it’s easy to scale capabilities as the digital twin ecosystem expands.
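To illustrate why parallel execution cuts computing time, here is a minimal sketch in Python. It does not use the Witness.io service, whose interface is not described here. `run_scenario`, `run_batch` and `waves_needed` are hypothetical names, the toy throughput formula is invented for illustration, and the 16 workers are an assumed figure. The idea is simply that independent what-if scenarios can be handed to a pool of workers rather than run one after another.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def run_scenario(scenario_id: int) -> dict:
    """Hypothetical stand-in for one simulation run; returns a toy result."""
    # A real run would launch the simulation model with this scenario's inputs.
    throughput = 100 + (scenario_id * 7) % 13  # invented formula, illustration only
    return {"scenario": scenario_id, "throughput": throughput}


def run_batch(scenario_ids, workers: int) -> list:
    """Run independent scenarios concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_scenario, scenario_ids))


def waves_needed(n_scenarios: int, workers: int) -> int:
    """Sequential 'waves' required when each worker runs one scenario at a time."""
    return math.ceil(n_scenarios / workers)


# An 80-scenario question spread over an assumed 16 workers.
results = run_batch(range(80), workers=16)
best = max(results, key=lambda r: r["throughput"])
print(f"{len(results)} scenarios in {waves_needed(80, 16)} waves; best: {best}")
```

With one worker the 80 scenarios run in 80 sequential waves; with 16 workers they need only 5, assuming the scenarios are independent and take similar time.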

The AI and machine learning elements were developed in partnership with data science specialists from Royal HaskoningDHV. The team is now using AI and machine learning to analyse simulation outcomes and identify possible improvements via automatic bottleneck detection. They’re also working on ways to speed up and extend simulations with surrogate models based on fast AI approximations.
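The specific techniques used are not detailed here, so the following Python sketch only illustrates the two ideas in their simplest form. Bottleneck detection is reduced to finding the process step with the highest average utilisation, and the surrogate model is a straight-line fit that approximates simulation results without rerunning the model. All step names, utilisation figures and tank-volume data are hypothetical, and `find_bottleneck`, `fit_line` and `predict_days` are illustrative names rather than part of any Twinn or Royal HaskoningDHV tool.

```python
from statistics import mean

# Hypothetical simulation output: utilisation (0 to 1) of each step, per scenario.
utilisation = {
    "retrieval": [0.62, 0.58, 0.65],
    "filtration": [0.97, 0.99, 0.96],
    "treatment": [0.71, 0.74, 0.69],
}


def find_bottleneck(util_by_step: dict) -> str:
    """Return the step with the highest mean utilisation across scenarios."""
    return max(util_by_step, key=lambda step: mean(util_by_step[step]))


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    return y_bar - b * x_bar, b


# Hypothetical training data from earlier runs: tank volume vs. retrieval days.
volumes = [100, 200, 300, 400]
days = [12, 22, 32, 42]
a, b = fit_line(volumes, days)


def predict_days(volume: float) -> float:
    """Surrogate estimate of retrieval days, with no new simulation run."""
    return a + b * volume


print(find_bottleneck(utilisation), predict_days(250))
```

A production surrogate would more likely be a richer model trained on many simulation runs, but the principle is the same: a fast approximation answers routine questions, and the full simulation is reserved for cases that need it.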

This is accelerating the iterative experimentation process, helping home in on blind spots and answer questions more quickly.

The impact

Data-driven answers to complex questions, on demand

The increasing use of predictive simulation has helped WRPS get data-driven answers to complex questions. Thanks to cloud computing, AI and machine learning, the team can now deliver those answers on demand. It used to take 20-25 working days to answer a standard 80-scenario question – now it takes just 2-4 days. This has fundamentally changed how the team uses their digital twins, boosting efficiency and reducing human error.
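As a quick check on what those figures imply, the short Python calculation below derives the range of speed-up from the stated before and after durations. The only inputs are the numbers quoted above; the variable names are illustrative.

```python
# Working days to answer a standard 80-scenario question (figures from the text).
before_days = (20, 25)
after_days = (2, 4)

# Most conservative case: shortest "before" against longest "after".
min_speedup = before_days[0] / after_days[1]
# Most favourable case: longest "before" against shortest "after".
max_speedup = before_days[1] / after_days[0]

min_reduction = 1 - after_days[1] / before_days[0]
max_reduction = 1 - after_days[0] / before_days[1]

print(f"Speed-up: {min_speedup:.1f}x to {max_speedup:.1f}x")
print(f"Time saved: {min_reduction:.0%} to {max_reduction:.0%}")
```

In other words, turnaround is roughly 5 to 12.5 times faster, a reduction of about 80% to 92% in elapsed working days.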

As one WRPS team member said: “We’re able to answer questions we didn’t think we’d be able to answer. It used to take hours to make changes to all the input files, but now it’s click and done. It’s quick to run the models, gather outputs and to do the analysis. And there’s no effort in data management, which is where there’s most risk of human error – it’s just done. These innovations have enabled us to use the models the way we’d envisioned.”

One example of the innovation in action was with waste retrieval. Part of the clean-up process involves retrieving tanks containing processed waste. The team was able to run hundreds of scenarios and answer critical questions in less than 2 weeks. Previously, this would have taken days’ worth of spreadsheet cutting and pasting alone, followed by weeks of data analysis. The WRPS Operations Team received granular insight on how to plan the next stage, with clear guidance on how tank volumes would affect timescales. There are hefty fines for missing deadlines, and the quick, detailed analysis helped facilitate safe, on-time retrieval.

Another example related to a proposed investment designed to boost throughput at the effluent treatment facility. The modelling quickly revealed an unknown bottleneck in the filtration system. Without addressing it, WRPS would have missed the new throughput target by 75% after spending millions. Thanks to the predictive simulation, the filtration system is being updated and the investment is on track to deliver the required return.

“The modelling is changing hearts and minds,” said another WRPS team member. “Our team has doubled in size this year, and our achievements are testament to the strong collaboration internally and with Lanner (now Twinn). It’s not just our colleagues at WRPS who increasingly rely on modelling to progress our mission. The Department of Energy has also asked us to lead training sessions – and is using us as an example of how predictive simulation should inform decision making.”